
The title "test_212_python_crawler_wos_database_" indicates that this project is a web crawler written in Python whose goal is to collect literature data from the WOS (Web of Science) database. WOS is a widely used academic literature retrieval platform in scientific research; it contains citation indexes covering science, technology, and the social sciences from around the world.

A few key points mentioned in the description are as follows:

1. **Crawling all records of a WOS literature database query result**: This typically involves using a Python HTTP library such as `requests` to send requests to a WOS interface, then fetching and parsing the returned data. WOS may require an API key or query-specific parameters before it returns a valid result, so the crawler needs to handle authentication and parameter settings.

2. **Prepared query terms for automated query crawling**: This means that the program needs to accept query keywords from the user and use them to construct the appropriate query requests. Automated crawling may also involve loops or scheduled tasks, e.g., a `while` loop or the `schedule` library to run the crawler at regular intervals.

3. **Source code operation, no GUI panel yet**: The project currently runs from source on the command line and has no graphical user interface (GUI). Users run and control the crawler by entering commands in a terminal. Adding a GUI would make the tool easier to use for people without a programming background, and could be done with libraries such as `tkinter`, `PyQt` or `wxPython`.
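The first two points can be sketched as follows. This is only an illustration under stated assumptions, not the project's actual code: the parameter names (`q`, `first`, `count`), the page size, and the `fetch_page` callable are all hypothetical, because the description names neither an endpoint nor a request format. The real names would have to come from the WOS documentation.

```python
from typing import Callable, Dict, Iterator, List

# Hypothetical page size: the source does not say how many records WOS returns per request.
PAGE_SIZE = 50


def build_params(query: str, first_record: int, count: int = PAGE_SIZE) -> Dict[str, str]:
    """Build the request parameters for one page of results.

    The keys "q", "first" and "count" are placeholders, not real WOS parameter names.
    """
    return {"q": query, "first": str(first_record), "count": str(count)}


def crawl_all(
    query: str,
    fetch_page: Callable[[Dict[str, str]], List[dict]],
) -> Iterator[dict]:
    """Yield every record for `query`, requesting pages until one comes back short."""
    first = 1
    while True:
        page = fetch_page(build_params(query, first))
        yield from page
        if len(page) < PAGE_SIZE:
            # A short (or empty) page means there are no more results.
            break
        first += PAGE_SIZE
```

In a real run, `fetch_page` would wrap an HTTP call such as `requests.get(url, params=params, timeout=...)` and return the parsed records. Passing it in as a function keeps the paging logic independent of the network and easy to test.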

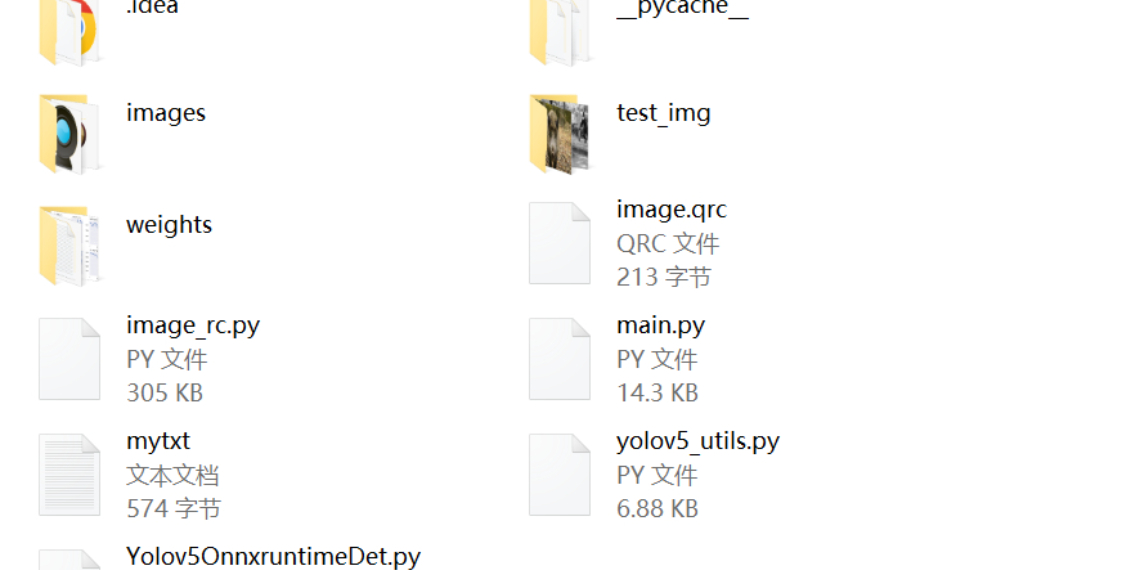

The file list contains only one file, "test_212.py", which is probably the crawler's main code file. In this file, we can expect to find the following core techniques:

- **HTTP requests**: use of the `requests` library, such as `requests.get()` or `requests.post()`, to interact with the WOS API.

- **Data parsing**: Use libraries such as `BeautifulSoup` or `lxml` to parse the returned HTML or XML data and extract the required information.

- **Loops and conditionals**: `for` loops may be used to traverse the query results during crawling, with `if` statements to handle the different outcomes of each request.

- **Exception handling**: To cope with network errors, API limits and similar failures, a `try-except` structure is likely used to catch and handle exceptions.

- **File manipulation**: may contain code to read and write files, such as `open()` and `write()`, to save the crawled data locally.

- **API call management**: may include logic to handle limits on the number of API calls, setting delays to avoid being blocked by the server.

- **Parameterized query**: dynamically constructs a query request based on a query term entered by the user.

- **Data storage**: JSON, CSV or other data formats may be used to save crawled literature information.
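Several of the points above (exception handling, delays between calls, data parsing, and saving to a file) can be combined in a short sketch. It is an assumption-laden illustration rather than the contents of "test_212.py": the `<record>`, `<title>` and `<year>` element names are hypothetical, and the standard-library `xml.etree.ElementTree` and `csv` modules stand in for `lxml` or `BeautifulSoup` so that the example runs without extra dependencies.

```python
import csv
import time
import xml.etree.ElementTree as ET
from typing import Callable, Dict, List


def fetch_with_retry(fetch: Callable[[], str], retries: int = 3, delay: float = 1.0) -> str:
    """Call `fetch`, sleeping and retrying when it raises; re-raise after the last attempt."""
    for attempt in range(1, retries + 1):
        try:
            return fetch()
        except Exception:
            # Real code would catch a narrower class,
            # e.g. requests.exceptions.RequestException.
            if attempt == retries:
                raise
            time.sleep(delay * attempt)  # wait longer after each failure
    raise ValueError("retries must be at least 1")


def parse_records(xml_text: str) -> List[Dict[str, str]]:
    """Extract title and year from each <record> element (hypothetical layout)."""
    root = ET.fromstring(xml_text)
    return [
        {
            "title": rec.findtext("title", default=""),
            "year": rec.findtext("year", default=""),
        }
        for rec in root.iter("record")
    ]


def save_csv(records: List[Dict[str, str]], path: str) -> None:
    """Write the parsed records to a CSV file with a header row."""
    with open(path, "w", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=["title", "year"])
        writer.writeheader()
        writer.writerows(records)
```

A typical call chain would be `save_csv(parse_records(fetch_with_retry(fetch)), "results.csv")`, where `fetch` is a zero-argument function that performs one HTTP request and returns the response text.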

Since the code itself is not available here, the above analysis is based on the general workflow and common design of typical crawler projects. The actual "test_212.py" file may contain these elements or others, and the specific implementation will vary with the developer's strategy and needs. To understand the project in depth, you need to read the source code and analyze it further.
